library(bbr)

library(pmtables)

library(dplyr)

library(here)

MODEL_DIR <- here("model", "pk")

FIGURE_DIR <- here("deliv", "figure")

1 Introduction

Our model run logs provide a condensed overview of the key decisions made during the model development process. They’re quick to compile and take advantage of the easy annotation features of bbr, such as the description, notes, and tags, to summarize the important features of key models.

This page demonstrates how to:

- Create and modify model run logs

- Check and compare model tags

- Pull in key diagnostics to support modeling decisions

For a walk-through on how to define, submit, and annotate your models, see the Model Management page. Guidance for creating model diagnostics is given on the Model Diagnostics and Parameterized Reports for Model Diagnostics pages.

2 Tools used

2.1 MetrumRG packages

bbr Manage, track, and report modeling activities, through simple R objects, with a user-friendly interface between R and NONMEM®.

pmtables Create summary tables commonly used in pharmacometrics and turn any R table into a highly customized tex table.

2.2 CRAN packages

dplyr A grammar of data manipulation.

knitr General-purpose literate programming engine.

3 Outline

During model development you often make a series of decisions about which models to test and which direction to take. Here we demonstrate how you can use a series of bbr functions, together with the model annotation, to summarize these key choices and decisions. You can also embed this kind of model summary in an R Markdown document that can be knit throughout the project to quickly compile a well-formatted summary you can share with other project collaborators, group leaders, etc.

4 Set up

Load the required packages and any helper functions, and set the file path to your model directory.

5 Create a run log

A run log table summarizing the important models is often included in a final report when the analysis is complete. However, it can also be useful along the way as a tool for reviewing the models you’ve already tried and determining what you may want to try next.

The ?run_log function reads in the annotation attached to your models (as described in Model Management) and some metadata that bbr tracks for you. By default, it finds all models in the directory that you pass it, and it can optionally search recursively in sub-directories.

log_df <- run_log(MODEL_DIR)

names(log_df)

 [1] "absolute_model_path" "run"                 "yaml_md5"
 [4] "model_type"          "description"         "bbi_args"
 [7] "based_on"            "tags"                "notes"
[10] "star"

You can explore this tibble with View(log_df) in RStudio, or you can use dplyr to select the columns to display and then knitr::kable() to create a well-formatted HTML table to include in your knit document.

log_df %>%

select(run, based_on, description, tags, notes) %>%

collapse_to_string(tags, notes) %>%

knitr::kable()

| run | based_on | description | tags | notes |

|---|---|---|---|---|

| 100 | NULL | NA | one-compartment + absorption, ETA-CL, ETA-KA, ETA-V, proportional RUV | systematic bias, explore alternate compartmental structure |

| 101 | 100 | NA | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, proportional RUV | eta-V2 shows correlation with weight, consider adding allometric weight |

| 102 | 101 | Base Model | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, CLWT-allo, V2WT-allo, QWT-allo, V3WT-allo, proportional RUV | Allometric scaling with weight reduces eta-V2 correlation with weight. Will consider additional RUV structures, Proportional RUV performed best over additive (103) and combined (104) |

| 103 | 102 | NA | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, CLWT-allo, V2WT-allo, QWT-allo, V3WT-allo, additive RUV | Additive only RUV performs poorly and will not consider going forward. |

| 104 | 102 | NA | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, CLWT-allo, V2WT-allo, QWT-allo, V3WT-allo, combined RUV | Combined RUV performs only slightly better than proportional only, should look at parameter estimates for 104, CI for Additive error parameter contains zero and will not be used going forward |

| 105 | 102 | NA | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, CLWT-allo, V2WT-allo, QWT-allo, V3WT-allo, CLEGFR, CLAGE, proportional RUV | Pre-specified covariate model |

| 106 | 105 | Final Model | two-compartment + absorption, ETA-CL, ETA-KA, ETA-V2, CLWT-allo, V2WT-allo, QWT-allo, V3WT-allo, CLEGFR, CLAGE, CLALB, proportional RUV | Client was interested in adding Albumin to CL |

| 999 | 106 | NA | NA | Simulation run |

The ?collapse_to_string function is a simple formatting helper to combine the vector of notes or tags into a single string for better display.
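To make the effect of collapsing concrete, here is a minimal sketch of the same idea using only dplyr and base R. It is not bbr's implementation. The `toy_log` tibble is a hypothetical stand-in for a run log, with tag values copied from the table above and assumed to be stored as one character vector per model.

```r
library(dplyr)

# Hypothetical stand-in for a run log: `tags` is a list column holding
# one character vector per model (values taken from runs 100 and 101).
toy_log <- tibble(
  run = c("100", "101"),
  tags = list(
    c("one-compartment + absorption", "ETA-CL", "ETA-KA", "ETA-V",
      "proportional RUV"),
    c("two-compartment + absorption", "ETA-CL", "ETA-KA", "ETA-V2",
      "proportional RUV")
  )
)

# Paste each vector into a single comma-separated string so the column
# becomes plain character and prints cleanly in a table.
collapsed <- toy_log %>%
  mutate(tags = vapply(tags, paste, character(1), collapse = ", "))

print(collapsed)
```

After this step `tags` is an ordinary character column, which is the form knitr::kable() needs to render it in a table.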

6 Check your model and data files

bbr records the state of your control stream and input data at the time the model was run. This allows you to easily check if either the model or data file has changed since the model was run. This is useful for catching models that may need to be re-run.
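The general idea of detecting a changed file can be sketched with a checksum comparison in base R. This is a conceptual illustration only and does not reproduce bbr's own bookkeeping. The temporary file and its contents are hypothetical stand-ins for a control stream.

```r
# Conceptual sketch: compare a checksum recorded at run time with the
# checksum of the file as it exists now.
ctl <- tempfile(fileext = ".ctl")   # hypothetical stand-in for a control stream
writeLines("$PROBLEM example", ctl)

# Checksum recorded "when the model was run"
hash_at_run <- unname(tools::md5sum(ctl))

# Someone edits the file after the run
writeLines(c("$PROBLEM example", "; edited after the run"), ctl)
hash_now <- unname(tools::md5sum(ctl))

# The file is up to date only if the two checksums match
up_to_date <- identical(hash_at_run, hash_now)
print(up_to_date)
```

A mismatch like this one is the kind of signal that tells you a model may need to be re-run.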

The bbr::check_up_to_date() function is described in more detail in the Checking if models are up-to-date section of the “Getting Started” vignette. Here we use it for its side effect of printing a warning if any model or data files in the run log models have changed.

# check if model or data files have changed (from bbr)

check_up_to_date(log_df)

7 Add diagnostics to your run log

The bbr::summary_log() function extracts some basic diagnostics from a batch of models by running ?model_summary (discussed in more detail in Model Management) on each model. It extracts elements of those model summaries into a tibble with one model per row. This function is discussed in more detail in the Creating summary log vignette.

These diagnostic columns can be appended to your run log using add_summary() as follows:

log_df <- add_summary(log_df)

log_df %>%

select(run, ofv, param_count, any_heuristics) %>%

knitr::kable()

| run | ofv | param_count | any_heuristics |

|---|---|---|---|

| 100 | 33502.96 | 10 | TRUE |

| 101 | 31185.58 | 12 | FALSE |

| 102 | 30997.91 | 12 | FALSE |

| 103 | 36411.27 | 12 | FALSE |

| 104 | 30997.71 | 13 | FALSE |

| 105 | 30928.82 | 14 | FALSE |

| 106 | 30904.41 | 15 | FALSE |

| 999 | NA | NA | NA |

Using bbr::add_summary() adds roughly 20 columns to your log_df tibble (details on all the columns can be found in ?summary_log). Here we select only the objective function value (ofv), count of non-fixed parameters (param_count), and the boolean flag indicating if any heuristics were triggered (any_heuristics).
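One way these diagnostics can support a modeling decision is to compare each model with the model it was based on. The sketch below does this with a dplyr self-join. The `diag_log` tibble is a hypothetical stand-in built from the values in the tables above, and `based_on` is simplified to a plain character column for illustration.

```r
library(dplyr)

# Hypothetical stand-in for the run log with diagnostics; values are
# copied from the tables above (run 999 is omitted because it has no OFV).
diag_log <- tibble(
  run         = c("100", "101", "102", "103", "104", "105", "106"),
  based_on    = c(NA, "100", "101", "102", "102", "102", "105"),
  ofv         = c(33502.96, 31185.58, 30997.91, 36411.27,
                  30997.71, 30928.82, 30904.41),
  param_count = c(10, 12, 12, 12, 13, 14, 15)
)

# Lookup table of parent diagnostics, keyed so it joins on `based_on`
parent <- diag_log %>%
  select(based_on = run, parent_ofv = ofv, parent_params = param_count)

# Change in OFV and in parameter count relative to the parent model
delta_log <- diag_log %>%
  left_join(parent, by = "based_on") %>%
  mutate(
    d_ofv    = ofv - parent_ofv,
    d_params = param_count - parent_params
  ) %>%
  select(run, based_on, d_ofv, d_params)

print(delta_log)
```

For example, run 104 lowers the OFV by only 0.20 relative to run 102 while adding one parameter, which is consistent with the note that the combined RUV model performs only slightly better than the proportional model.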

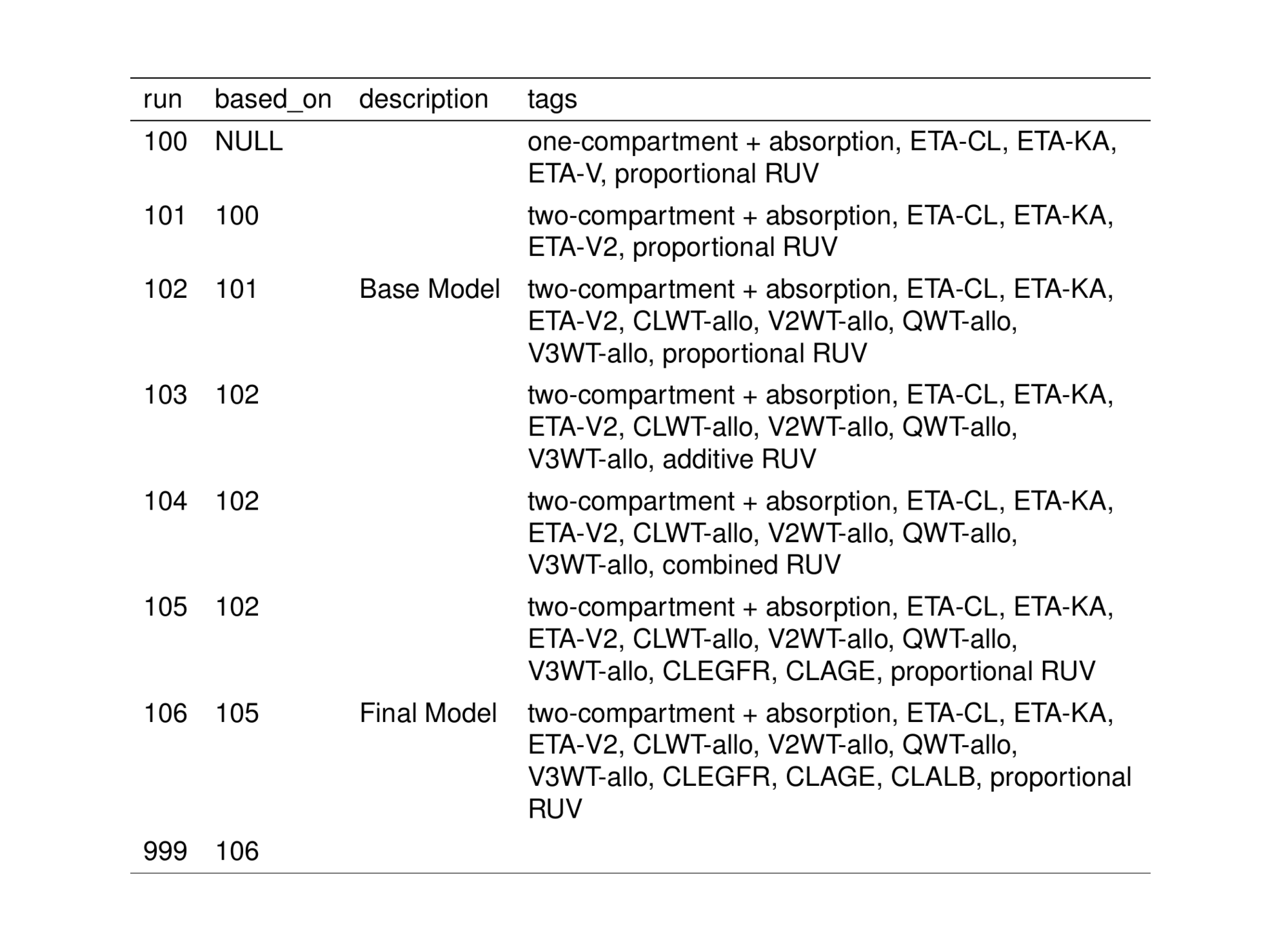

9 Creating a report-ready run log table

While run logs are useful for checking the state of a project, they’re also often included in the final report. MetrumRG's reports are written in LaTeX, and report-ready run log tables are created by passing the run log tibble to the pmtables package:

log_df %>%

select(run, based_on, description, tags) %>%

collapse_to_string(tags) %>%

st_new() %>%

st_left(tags = col_ragged(8)) %>% # fix column width for text-wrapping

st_as_image()